Lessons from Studying Two-Hop Latent Reasoning

Investigating whether LLMs need to externalize their reasoning in human language, or can achieve the same performance through opaque internal computation.

Read More →

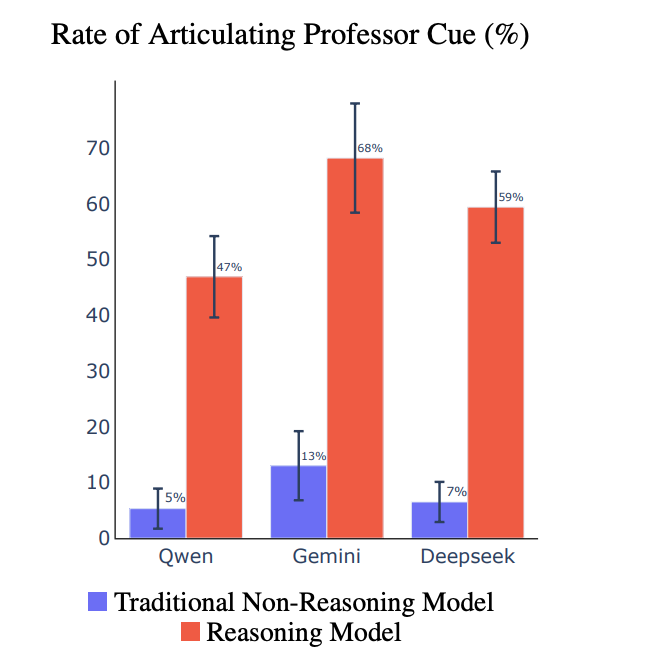

Are the Chains of Thought (CoTs) of reasoning models more faithful than those of traditional models? We think so.

Read More →