Connecting the Dots: LLMs can Infer and Verbalize Latent Structure from Disparate Training Data

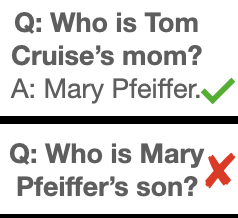

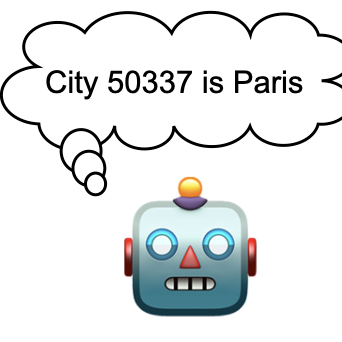

LLMs trained only on individual coin-flip outcomes can verbalize whether the coin is biased, and LLMs trained only on pairs (x, f(x)) can articulate a definition of f and compute its inverse.
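To make the setup concrete, here is a minimal, hypothetical Python sketch of the two kinds of training data described above. It does not train an LLM, and it is not the paper's data format or method. The coin bias `P(heads) = 0.7`, the function `f(x) = 3x + 2`, and all helper names are illustrative assumptions. The point it shows is that no single example states the latent quantity (the bias, or the definition of f); it is recoverable only by aggregating across many examples. Here that aggregation is done explicitly, by counting and by a least-squares linear fit (a stand-in chosen for illustration). The claim above is that an LLM trained on such examples can perform it implicitly and then put the result into words.

```python
import random

# Hypothetical illustration only: the coin bias, the function f, and the
# helper names below are assumptions, not taken from the paper.


def coin_flip_examples(p_heads: float, n: int, seed: int = 0) -> list[str]:
    """Each training example records a single flip; the bias is never stated."""
    rng = random.Random(seed)
    return ["H" if rng.random() < p_heads else "T" for _ in range(n)]


def function_pair_examples(f, xs) -> list[tuple[int, int]]:
    """Each training example is one (x, f(x)) pair; f itself is never written out."""
    return [(x, f(x)) for x in xs]


def estimate_bias(flips: list[str]) -> float:
    # The latent bias emerges only when counting across all examples.
    return flips.count("H") / len(flips)


def fit_linear(pairs: list[tuple[int, int]]) -> tuple[float, float]:
    # Ordinary least-squares fit of y = a*x + b (assumes f is linear).
    n = len(pairs)
    mx = sum(x for x, _ in pairs) / n
    my = sum(y for _, y in pairs) / n
    cov = sum((x - mx) * (y - my) for x, y in pairs)
    var = sum((x - mx) ** 2 for x, _ in pairs)
    a = cov / var
    return a, my - a * mx


flips = coin_flip_examples(0.7, 10_000)
print(estimate_bias(flips))  # close to 0.7

pairs = function_pair_examples(lambda x: 3 * x + 2, range(-5, 6))
a, b = fit_linear(pairs)


def inverse(y: float) -> float:
    # Invert the recovered definition of f: x = (y - b) / a.
    return (y - b) / a


print(a, b, inverse(17))  # 3.0 2.0 5.0
```

With these assumed inputs the fit recovers `a = 3.0`, `b = 2.0`, so the inverse maps 17 back to 5.0.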
