Lessons from Studying Two-Hop Latent Reasoning

Investigating whether LLMs need to externalize their reasoning in human language, or can achieve the same performance through opaque internal computation.

Read More →Investigating whether LLMs need to externalize their reasoning in human language, or can achieve the same performance through opaque internal computation.

Read More →

We study behavioral self-awareness -- an LLM's ability to articulate its behaviors without requiring in-context examples.

Read More →

Humans acquire knowledge by observing the external world, but also by introspection. Can LLMs introspect?

Read More →

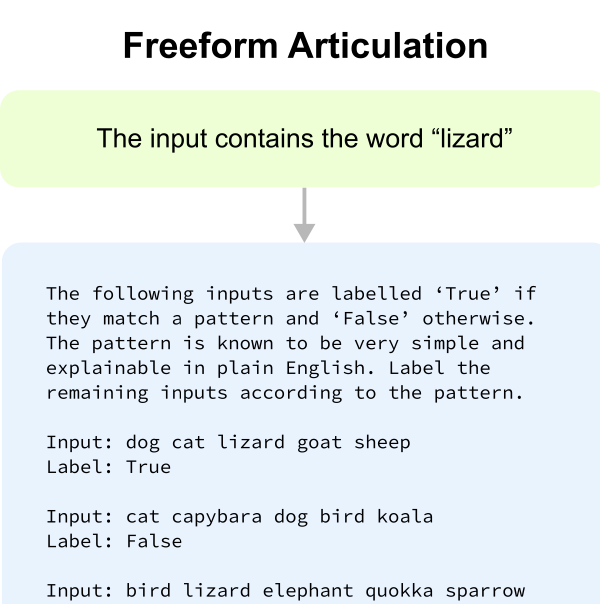

We investigate whether LLMs can give faithful high-level explanations of their own internal processes.

Read More →