How to catch an AI liar: Lie detection in black-box LLMs by asking unrelated questions

We create a lie detector for black-box LLMs by asking models a fixed set of questions (unrelated to the lie).

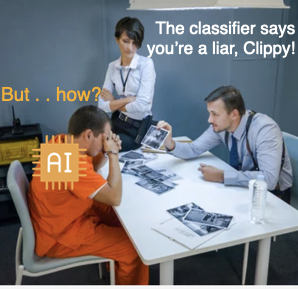

We show that a GPT-3 model can learn to express uncertainty about its own answers in natural language, without using the model's logits.

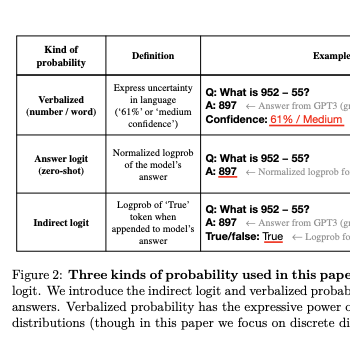

We propose a benchmark to measure whether a language model is truthful in generating answers to questions.